LSF Alphabet Translator.

This project aims to create a live LSF - Langue des Signes Française - equivalent to the American Sign Language - translator as an exercice for the Fast AI Python library.

Dataset creation

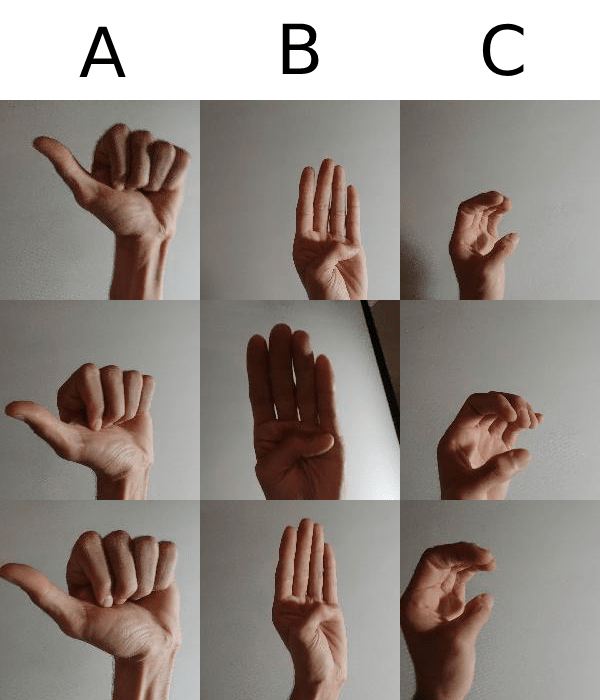

To create the dataset, I recorded myself signing each of the alphabet letters for approximatively 3 minutes and at different angles and light exposures.

Then using a simple Python script I converted the videos to a set of images:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 |

import cv2 NAME = "A" vidcap = cv2.VideoCapture(NAME + ".mp4") def getFrame(sec): """ save a frame """ vidcap.set(cv2.CAP_PROP_POS_MSEC, sec * 1000) hasFrames, image = vidcap.read() if hasFrames: cv2.imwrite( NAME + "/" + NAME + "-" + str(count) + ".jpg", image ) # save frame as JPG file return hasFrames sec = 0 frameRate = 0.5 # // it will capture an image each 0.5 second count = 1 success = getFrame(sec) while success: count = count + 1 sec = sec + frameRate sec = round(sec, 2) success = getFrame(sec) |

Model Training and Transfer Learning

My model needed to learn first how to recognize hands, so I decided to train it against the EgoHands dataset that contains as many as 4 800 labelled images of first person interactions between two people.

<work="in progress" / >